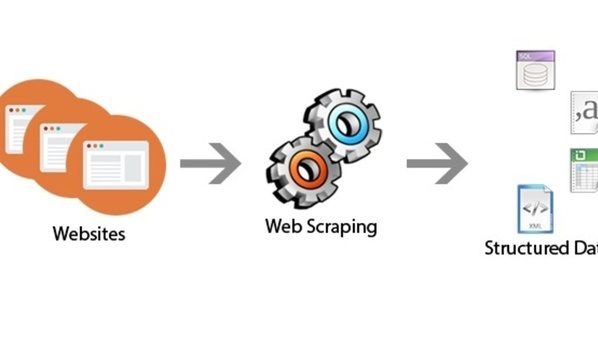

Billions of visitors browse the web each day, and not all of them are human. Many are bots, programs that crawl the web and collect information about the websites they find, including their pages and the content those pages contain.

That’s how you get search results when you use a search engine. Search engines use these bots to record, or index, the text of websites, and their algorithms draw on that index to deliver a list of pages every time you conduct a search.
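To make the indexing idea concrete, here is a minimal sketch of an inverted index, the data structure commonly used to map words to the pages that contain them. It illustrates the concept only and is not how any particular search engine works; the function names and the sample pages are hypothetical.

```python
import re
from collections import defaultdict


def build_index(pages):
    """Map each lowercase word to the set of page URLs that contain it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in re.findall(r"[a-z0-9]+", text.lower()):
            index[word].add(url)
    return index


def search(index, query):
    """Return the sorted URLs of pages that contain every word in the query."""
    words = re.findall(r"[a-z0-9]+", query.lower())
    if not words:
        return []
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return sorted(results)


# Hypothetical pages standing in for text a crawler has already collected.
PAGES = {
    "https://example.com/a": "Cheap flights to Paris",
    "https://example.com/b": "Cheap hotels in Paris",
}
INDEX = build_index(PAGES)
```

Calling `search(INDEX, "cheap paris")` returns both sample pages, while `search(INDEX, "flights")` returns only the first. A real engine would then rank the matches rather than simply sorting them.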

Search engines aren’t the only businesses engaged in this practice. Some companies extract publicly available data for their own purposes, which include gathering intelligence for pricing and product strategies, and data analysis.

Web scraping is a billion-dollar business

Many large multi-billion dollar companies use web scraping daily as a core part of their operations. Some base their entire business model on it, and nearly every industry uses web scraping to analyze both internal and external operations.

Search Engines

Companies like Yahoo!, Bing and Google are among the original web scraping businesses. As mentioned earlier, they use bots to crawl the web and index all the content in order to serve users the most relevant results.

The power of search engines lies in their algorithms. By analyzing keywords, backlinks (external links pointing to web pages), and other factors contributing to authority, search engines can rank websites and display the most relevant links to users in the search engine results pages.

Search Engine Optimization (SEO) Platforms

The details of how search engine algorithms work are mostly kept secret. This has given rise to SEO companies like Moz, SEMRush and Ahrefs that use web scraping to reverse engineer how pages are ranked. These techniques may not reveal the exact details of the algorithm, but they do allow these companies to offer services that help businesses improve their overall ranking.

Online Marketplaces

Online marketplaces are search engines that aggregate product and service listings from e-commerce operations. These include websites like Skyscanner or trivago, along with other services like Google Shopping.

Online marketplaces are powerful because they can aggregate thousands of stores in one place and surface the lowest prices. They use the same method as search engines, crawling the web to rank products and services that are then delivered to users according to their search criteria.
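As a rough illustration of the aggregation step, the sketch below takes listings that have already been scraped from several stores and keeps the cheapest offer for each product. The field names (`product`, `store`, `price`) and the sample data are assumptions made for this example, not the schema of any real marketplace.

```python
def lowest_prices(listings):
    """Return, for each product, the listing with the lowest price."""
    best = {}
    for item in listings:
        current = best.get(item["product"])
        if current is None or item["price"] < current["price"]:
            best[item["product"]] = item
    return best


# Hypothetical listings, as if collected from three different stores.
LISTINGS = [
    {"product": "hotel-paris", "store": "store-a", "price": 120.0},
    {"product": "hotel-paris", "store": "store-b", "price": 95.5},
    {"product": "flight-rome", "store": "store-a", "price": 60.0},
    {"product": "flight-rome", "store": "store-c", "price": 72.0},
]
CHEAPEST = lowest_prices(LISTINGS)
```

Here `CHEAPEST["hotel-paris"]["store"]` is `"store-b"`. When two stores tie on price, this version keeps whichever listing appeared first.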

Can your business use web scraping?

The answer, in most cases, is yes. If your business is in the e-commerce space, web scraping is quickly becoming an essential part of a marketing strategy. Companies have two main paths they can take to leverage web scraping:

In-house web scraping

In-house web scraping takes the entire process and internalizes it within your company. It requires a team of developers who can write custom data extraction scripts to power the bots that crawl the web.
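A data extraction script can be quite small. The sketch below uses only Python's standard-library `html.parser` module to pull the page title and link targets out of an HTML string. The class name, the `extract` helper, and the sample markup are hypothetical; a production scraper would also need to fetch pages, handle errors, and respect the target site's terms.

```python
from html.parser import HTMLParser


class LinkAndTitleParser(HTMLParser):
    """Collect the <title> text and every <a href="..."> value from a page."""

    def __init__(self):
        super().__init__()
        self.links = []
        self.title = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:  # skip anchors that have no href attribute
                self.links.append(href)
        elif tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def extract(html):
    """Parse an HTML string and return its title and link targets."""
    parser = LinkAndTitleParser()
    parser.feed(html)
    parser.close()
    return {"title": parser.title.strip(), "links": parser.links}


# Hypothetical markup standing in for a downloaded page.
SAMPLE_HTML = """<html><head><title> Shop </title></head>
<body><a href="/item/1">One</a><a href="/item/2">Two</a><a>No link</a></body></html>"""
```

Running `extract(SAMPLE_HTML)` yields the title `"Shop"` and the two item links, ignoring the anchor without an `href`.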

Taking web scraping in-house can be resource-intensive and expensive; however, there are many benefits, including precise customization and faster troubleshooting.

Web scraping can be complex, and programmers can run into many roadblocks during the process. One of the main issues is having your IP address blocked by the target website’s server. This happens because web scraping sends many requests to the server, which can often be mistaken for a DDoS (distributed denial-of-service) attack.

Proxies are an essential part of the web scraping process

Proxies can distribute requests and prevent server issues. They act as third-party intermediaries that allow users to route their requests through an intermediate server and remain anonymous. Several types exist, including data center and residential proxies, and the choice between them depends on the scraping goal and the target website.
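One simple way to distribute requests is round-robin rotation, where each outgoing request is assigned the next proxy in a pool. The sketch below shows that assignment plus a helper that builds a standard-library `urllib` opener routed through a given proxy. The function names and proxy addresses are placeholders invented for this example, and nothing here sends a request.

```python
import urllib.request
from itertools import cycle


def assign_proxies(urls, proxies):
    """Pair each URL with a proxy, cycling through the pool in order."""
    if not proxies:
        raise ValueError("at least one proxy is required")
    pool = cycle(proxies)
    return [(url, next(pool)) for url in urls]


def opener_for(proxy):
    """Build a urllib opener that routes HTTP and HTTPS traffic via proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return urllib.request.build_opener(handler)


# Placeholder proxy addresses and target URLs, not real endpoints.
PROXIES = ["http://proxy1.example:8080", "http://proxy2.example:8080"]
URLS = [
    "https://example.com/page/1",
    "https://example.com/page/2",
    "https://example.com/page/3",
]
PLAN = assign_proxies(URLS, PROXIES)
```

With two proxies and three URLs, the third URL wraps around to the first proxy. Each `(url, proxy)` pair in `PLAN` could then be fetched with `opener_for(proxy).open(url)`.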

Outsourced web scraping

There are many ready-to-use tools available on the market that allow businesses to obtain data easily so they can allocate more resources to analysis. These solutions help enterprises extract high-quality data and leverage world-class infrastructure while saving money in the process.